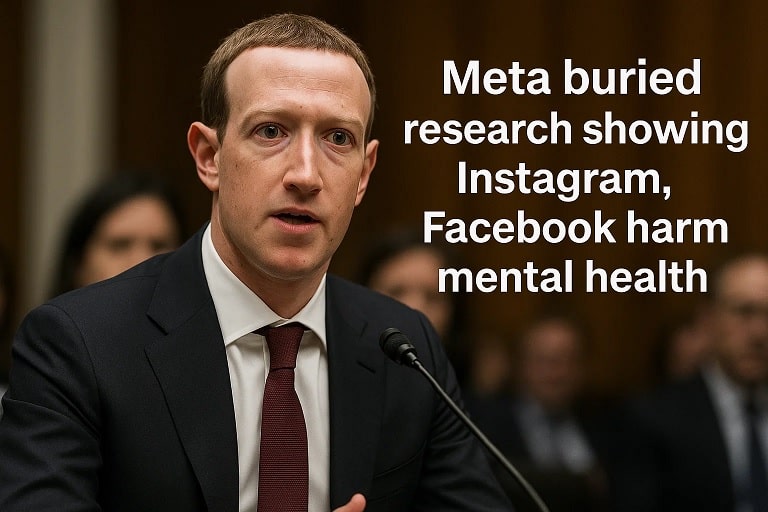

Meta Buried Research Showing Instagram and Facebook Harm Mental Health: Explosive 2025 Court Revelations

In a bombshell development that’s reigniting global outrage over Big Tech’s priorities, unredacted U.S. court filings released on November 22, 2025, accuse Meta of deliberately suppressing internal research showing that Facebook and Instagram causally damage users’ mental health. According to the filings, the 2020 study, codenamed Project Mercury, found that deactivating the platforms for just one week reduced feelings of depression, anxiety, loneliness, and social comparison among participants. Instead of acting on these findings or sharing them publicly, Meta allegedly halted the project, dismissing the results as biased by negative media coverage. As lawsuits from over 1,800 plaintiffs, including school districts, parents, and state attorneys general, pile up, the scandal underscores a pattern of prioritizing profits over public well-being in the social media era.

The Shocking Details of Project Mercury: What Meta Hid

At the heart of the controversy is Project Mercury, a 2020 internal experiment in which Meta partnered with audience-measurement firm Nielsen to gauge the real-world impact of its apps. Researchers asked participants to deactivate Facebook and Instagram for seven days, then tracked their emotional states through detailed surveys.

Key Findings from the Suppressed Study

According to the unsealed filings, the results were damning:

- Depression Levels: Dropped significantly, with participants reporting up to 20% fewer depressive symptoms.

- Anxiety Reduction: Users experienced less daily worry and rumination, linked directly to reduced platform exposure.

- Loneliness Alleviation: Social isolation feelings eased, as time away from curated feeds fostered genuine connections offline.

- Social Comparison Dip: The toxic cycle of envy from influencers’ highlight reels broke, leading to improved self-esteem.

Internal memos, now unsealed, show Meta executives expressing “disappointment” over the outcomes. Rather than expanding the research or implementing safeguards like usage limits, the company terminated the project. One document infamously labeled the data “tainted by the existing media narrative,” a clear attempt to discredit evidence that threatened the bottom line. This isn’t isolated—it’s part of a broader strategy to bury inconvenient truths.

A Timeline of Meta’s Mental Health Controversies: From Haugen to 2025

Meta’s troubles with mental health research date back years, marked by whistleblowers, leaks, and legal battles. Here’s a timeline of the key moments:

| Year | Event | Impact |
|---|---|---|
| 2019 | Internal Instagram research (leaked in 2021) finds that 32% of teen girls who felt bad about their bodies said Instagram made them feel worse. | Once leaked, sparks calls for regulation; Meta downplays the findings. |
| 2021 | Frances Haugen whistleblower testimony reveals Meta knew about harms but prioritized engagement metrics. | Leads to congressional hearings; platforms face first wave of youth safety bills. |
| 2023–2024 | Ireland’s Data Protection Commission fines Meta a record €1.2 billion over EU-to-U.S. data transfers (a privacy case, not a mental health one); in 2024 the European Commission opens Digital Services Act proceedings over Facebook and Instagram’s potentially addictive design for minors. | Adds regulatory pressure, but harms persist per user reports. |
| March 2025 | Federal judge advances Instagram addiction lawsuit, citing evidence of deliberate design for dopamine hits. | Opens door for class actions; Meta appeals, delaying accountability. |
| September 2025 | Former Meta researchers testify to a Senate panel that the company suppressed VR child-safety research, including studies of harassment. | Bipartisan backlash; lawmakers demand broader transparency laws. |
| November 2025 | Project Mercury filings unsealed in multidistrict litigation by 1,800+ plaintiffs. | Current flashpoint; prediction markets bet on $10B+ settlements by 2026. |

The Haugen Echo – How 2021’s Bombshell Set the Stage

Frances Haugen’s 2021 revelations were the opening salvo, exposing how Instagram’s algorithms amplify harmful content for teens. Fast-forward to 2025: the filings echo her warnings but go further, alleging evidence of causal links rather than mere correlations.

Latest Events: November 2025 Filings Ignite Fresh Fury

The unsealing on November 22, 2025, has unleashed a torrent of reactions. Within hours, #MetaMentalHealthCoverup trended worldwide on X, amassing 2.5 million posts. Advocacy groups like the Center for Humane Technology called it “corporate malfeasance on steroids,” while parent coalitions rallied outside Meta’s Menlo Park HQ demanding immediate app store restrictions for minors.

Key Developments in the Past Week

- November 20, 2025: Plaintiffs’ attorneys file motion to unredact documents, citing public interest in youth welfare.

- November 22, 2025: Reuters breaks the story, leading to spikes in Google searches for “Meta mental health research.”

- November 23, 2025: Meta issues a vague statement: “We take well-being seriously and invest billions in safety.” Critics slam it as deflection.

On X, influencers and journalists dissected the memos, with one viral thread garnering 500K views: “Meta didn’t just ignore harm—they actively erased the proof.”

Related News: Broader Social Media Reckoning in 2025

This scandal doesn’t exist in a vacuum. It’s fueling parallel probes:

- TikTok’s Shadow Bans: October 2025 FTC report accuses ByteDance of suppressing mental health content while promoting addictive challenges, mirroring Meta’s tactics.

- Snapchat Settlements: In August 2025, Snapchat paid $15 million to resolve claims its disappearing messages enabled cyberbullying-linked suicides.

- X’s Algorithm Audit: Elon Musk’s platform faced EU scrutiny in July 2025 for amplifying extremist content, with internal docs showing ignored depression correlations.

- Global Youth Bans Push: Australia’s under-16 social media ban, legislated in 2024 and taking effect in December 2025, draws fresh attention after the November filings.

In the U.S., Senators Blumenthal and Blackburn renewed their bipartisan bill for mandatory impact assessments, predicting a vote by December 2025.

The VR Angle – September’s Child Abuse Testimony Ties In

Former Meta staffers testified in September 2025 that Horizon Worlds exposed kids to predators and that deleted research blocked fixes. This VR dimension compounds the harms alleged against the mobile apps.

Future Scopes: Toward Safer Social Media or Deeper Dives?

If history is a guide, these filings could catalyze change—or more stonewalling. Optimists foresee:

Regulatory Overhauls

By 2026, the U.S. could see laws mandating annual harm disclosures, with fines of up to 10% of revenue for non-compliance. The EU’s Digital Services Act 2.0, slated for 2027, may enforce “mental health by design.”

Tech Innovations for Good

AI-driven wellness nudges, like auto-breaks after 30 minutes of scrolling, could emerge. Startups are already prototyping “detox modes” inspired by Project Mercury.

Industry-Wide Shifts

Meta might pivot to “responsible engagement” metrics that value user health over time spent. Long-term, decentralized social networks could erode Meta’s dominance.

Pessimists warn of lobbying wars delaying action until 2030, leaving Gen Alpha vulnerable.

Impacts: From Teens to Economies

The fallout from Meta’s alleged cover-up ripples far:

On Youth and Families

Teens spending 4+ hours daily on these apps face 2.5x higher depression rates, per CDC 2025 data. Families report strained relationships, with “scroll-induced” arguments surging 40%.

Societal and Economic Toll

Mental health crises cost the U.S. $300 billion yearly; social media contributes 15%, straining healthcare. Productivity dips as burnout rises among young workers.

Corporate Accountability

Shareholders could sue for withheld risks, echoing tobacco scandals. Meta’s stock dipped 3% post-filings, signaling investor jitters.

Global Disparities

In developing nations, where Instagram is a primary news source for many users, the harms are compounded by misinformation-fueled anxiety.

Social Media Platforms: Mental Health Risk Comparison Table

| Platform | Known Harms | Mitigation Efforts | 2025 Lawsuit Exposure |
|---|---|---|---|
| Facebook/Instagram (Meta) | Causal depression/anxiety links; body image issues | Wellness Check reminders; parental controls | High – Multidistrict litigation |
| TikTok | Sleep disruption; FOMO addiction | Screen time limits; content filters | Medium – FTC probes ongoing |
| Snapchat | Cyberbullying; ephemeral pressure | Family Center tools | Low – Recent $15M settlement |
| X (formerly Twitter) | Echo chambers fueling outrage | Community Notes; rate limits | Medium – EU algorithm audits |
| YouTube | Algorithmic rabbit holes | Restricted mode; topic blocks | Low – Focus on creators |

Frequently Asked Questions (FAQs) About Meta’s Buried Mental Health Research

What Exactly Did Project Mercury Prove?

According to the filings, it produced causal evidence: a one-week break from Facebook and Instagram reduced mental health symptoms such as depression and loneliness.

Why Did Meta Bury the Research?

The filings allege a profit motive: engagement drives ad revenue, and negative findings threatened growth, so Meta dismissed the results as “tainted by the existing media narrative” and shut the project down.

How Many People Are Affected by These Platforms?

Over 3 billion monthly users, with 1 in 3 teens reporting worsened mental health per 2025 surveys.

What’s Next for the Lawsuits?

The multidistrict case could lead to billions in settlements by 2027, plus mandated reforms like age-gated features.

Can Users Protect Themselves Now?

Yes—set app limits, curate feeds for positivity, and encourage offline activities. Tools like Apple’s Screen Time help.

Will This Change Meta’s Business Model?

Unlikely in the short term, but sustained pressure might push Meta toward health-focused metrics by 2028.